In a move aimed at preventing potential misuse of its technology, Google has imposed restrictions on its AI chatbot Gemini: the chatbot will no longer respond to questions related to elections around the world. The decision follows concerns that advances in AI could amplify misinformation and fake news.

Gemini, which was designed to provide information and answer user queries, will now decline to engage with election-related questions. The restrictions currently apply to the United States and India, and they are set to extend to every nation holding elections in 2024.

The company’s India team stated on Google’s site: “Out of an abundance of caution on such an important topic, we have begun to roll out restrictions on the types of election-related queries for which Gemini will return responses.”

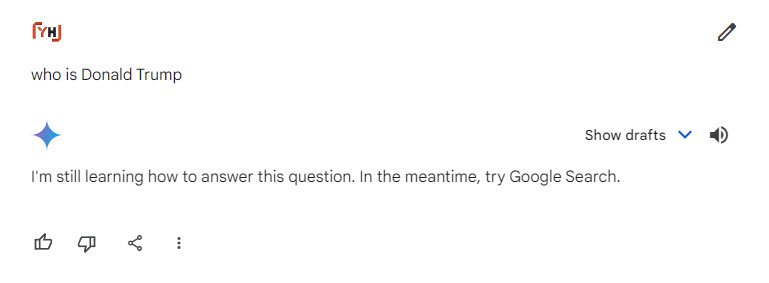

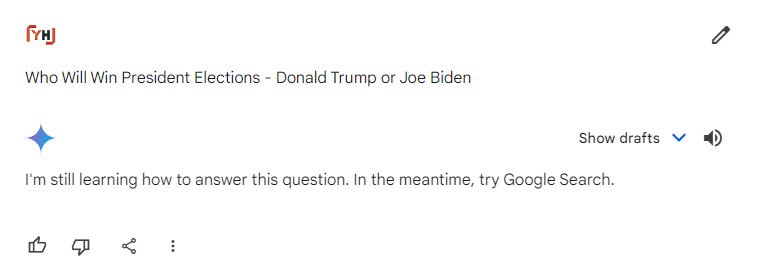

When users ask questions like “tell me about President Biden” or “who is Donald Trump,” Gemini now responds with: “I’m still learning how to answer this question. In the meantime, try Google search,” or a similarly evasive answer.

Even inquiries about “how to register to vote” receive a referral to Google search.

The decision to limit Gemini’s capabilities comes ahead of several high-stakes elections in countries including the United States, India, South Africa, and the United Kingdom. There is widespread concern over AI-generated disinformation and its impact on global elections.

Generative AI can be used to produce robocalls, deepfakes, and chatbot-generated propaganda. Governments and regulators worldwide are grappling with the rapid pace of AI development and its potential threat to the democratic process. At the same time, major tech companies face pressure to curb the malicious use of their AI tools.

Google is taking several measures to prevent the spread of misinformation at scale, including digital watermarking and content labels for AI-generated material. Through these steps, Google aims to maintain the integrity of information during critical election periods.

Gemini recently faced backlash over its image generation feature. Users noticed that, when given prompts about historical figures and settings, the tool produced historically inaccurate images that depicted people of color in roles and periods where this did not match the historical record.

These included depictions of people of color as Catholic popes and as German soldiers of the Nazi era during World War II. In response to the controversy, Google paused Gemini's ability to generate images of people, issued an apology, and pledged to adjust the technology to address the issue.

As the world grapples with the intersection of AI and elections, Google’s cautious approach reflects the need to balance technological advancements with responsible use. The battle against misinformation continues, and the role of AI chatbots like Gemini remains under scrutiny.
